PRISM TECH POLICY NEWSLETTER: AI disruption will take years. Calm down.

Dear readers,

Thanks to all the new subscribers! Welcome aboard!

It’s been a pretty notable couple of weeks in tech policy: another wave of layoffs, the US Department of Justice going after Google’s ad business, Meta reinstating Trump, and so much more.

And yes, of course, there’s more ChatGPT-mania, exemplified most recently by BuzzFeed’s stock jumping 92% after it announced it would use the service to write articles. I am aware that everyone is talking about this, and I hate to add to the noise, but if this is the future of tech, it’s worth taking the time. So let’s break it down.

Looking forward to working with many of you soon on where AI is taking us.

– George

THE BIG TAKE

AI disruption will take years. Calm down.

I’ve seen a lot of writing about AI in the last couple of weeks. A LOT.

ChatGPT is obviously the reason… ChatGPT is amazing at writing things. ChatGPT actually isn’t amazing at writing things. ChatGPT is going to change the world. ChatGPT is actually kinda dumb. ChatGPT is coming for your job. Actually, here are seven reasons why ChatGPT won’t take your job. And so on.

I promise you I’m not just salty because it passed an MBA exam (I can assure you the exams were not hard, nor were they the point of doing an MBA). But I am frustrated by how little there is to take away from the volumes of breathless writing about the AI future.

Not to pick on Forbes, but here is an excerpt from a recent article about AI business models:

Humanizing experiences (HX) are disrupting and driving the democratization and commoditization of AI. These more human experiences rely on immersive AI. By 2030, immersive AI has the potential to co-create innovative products and services navigating through adjacencies and double up the cash flow, opposed to a potential 20% decline in cash flow with nonadopters, according to McKinsey.

Huh?

While there is a lot we still don’t know about the future of AI, here are three P’s to organize some takeaways:

Practice: You should be investing in AI, but you have plenty of time to do so, so don’t freak out.

Policy: ChatGPT is a minefield.

Prognostication: There are some real causes for concern.

Practice: this all takes more time than you think

ChatGPT isn’t that good right now. As PRISM’s Head of Product would say: “What is the user problem this is solving?” (Yes, I’ve been listening, Amanda). ChatGPT is a perfect example of why that question matters: it’s cool technology that nobody really asked for. It can create a lot of marginally exciting fun in some niche places, but it offers little significant value right now.

But Microsoft just invested $10b in OpenAI, in a frankly very strange deal where they get 75% of OpenAI’s profits until the investment is paid back and then a 49% stake thereafter. That means OpenAI is going to need to make several billion dollars in profit per year quite soon. How? And from whom? While ChatGPT can sell subscriptions, much of the investment in AI seems to be based on “innovation potential”. If the previous era of VC (long live the king) was “Go get users, and we’ll figure out how to make money later”, the new era seems to be “Go make AI and we’ll figure out how to make money later”.
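To put a rough number on “several billion dollars”, here is a back-of-the-envelope sketch in Python. It assumes the deal works exactly as described above (75% of profits go to Microsoft until the $10b is repaid), that profits are flat from year to year, and that there is no interest or time value of money. The annual profit figures are hypothetical scenarios, not forecasts.

```python
# Back-of-the-envelope payback math for the deal as described in the text.
# Assumptions (not from any deal document): flat annual profit, no interest.

INVESTMENT_B = 10.0   # Microsoft's investment, in $ billions
PROFIT_SHARE = 0.75   # share of OpenAI profits going to Microsoft until repaid


def profit_needed_to_repay(investment_b: float, profit_share: float) -> float:
    """Total profit OpenAI must earn before the investment is paid back."""
    return investment_b / profit_share


def years_to_repay(investment_b: float, profit_share: float,
                   annual_profit_b: float) -> float:
    """Years until payback if profit stays flat at annual_profit_b."""
    return profit_needed_to_repay(investment_b, profit_share) / annual_profit_b


total_profit_b = profit_needed_to_repay(INVESTMENT_B, PROFIT_SHARE)
print(f"Cumulative profit needed: ${total_profit_b:.1f}b")

# Hypothetical annual profit scenarios, in $ billions.
for annual_profit_b in (1.0, 2.0, 5.0):
    years = years_to_repay(INVESTMENT_B, PROFIT_SHARE, annual_profit_b)
    print(f"At ${annual_profit_b:.0f}b profit per year: {years:.1f} years to repay")
```

On these assumptions, OpenAI needs roughly $13b in cumulative profit before the payback is complete, so even $2b a year would take more than six years.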

Let’s paint a highly optimistic picture: AI is going to be as transformative as the smartphone, which is probably the most successful product and disruptive technology of the past two decades. Interestingly, the market size of AI in 2022 was almost exactly the size of the smartphone market in 2007, when the iPhone was released and the smartphone idea really caught fire. Current market size forecasts are painting an almost identical market size evolution!

Market size growth - smartphones (base year 2007) vs AI (base year 2022)

So what should you take away from all this?

First, predicting any technology will be as successful and impactful as the smartphone should be approached with caution, especially if we remember last year’s Web3 hype (more on that in a future newsletter).

Second, even if you believe ChatGPT is the iPhone and is kicking us into a new era, all of this takes a lot of time to play out. BlackBerry reached its highest-ever market share three years after the iPhone was released. Product improvements take years to reach the level of quality needed for mass adoption. Consumers take time to change behavior. Businesses are slow and bureaucratic.

Third, this is AI as a whole. Generative AI, which is the part with all the ChatGPT excitement, was estimated at $8b in 2022, growing to $116b by 2030 (PRISM estimate). So the actual thing we’re talking about (ChatGPT) has a potential market impact that could become about one tenth the size of the online ads market (~$600b today and over $1T in 2030) in the next 8 years.
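For anyone who wants to check the arithmetic, here is a minimal Python sketch using only the figures quoted above. The one assumption is that “over $1T” is treated as exactly $1,000b, which makes the resulting share an upper bound.

```python
# Sanity check on the generative AI figures quoted in the text.
# Assumption: the 2030 online ads market is taken as exactly $1,000b,
# the floor of "over $1T", so the computed share is an upper bound.

GEN_AI_2022_B = 8.0      # generative AI market in 2022, $ billions
GEN_AI_2030_B = 116.0    # generative AI market in 2030, $ billions
ADS_2030_B = 1000.0      # online ads market in 2030, $ billions (floor)


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


growth = cagr(GEN_AI_2022_B, GEN_AI_2030_B, 2030 - 2022)
share_of_ads = GEN_AI_2030_B / ADS_2030_B

print(f"Implied annual growth, 2022 to 2030: {growth:.1%}")
print(f"Generative AI as a share of online ads in 2030: {share_of_ads:.1%}")
```

That works out to compound growth of roughly 40% a year, which is very fast, and still leaves generative AI at around a tenth the size of online ads in 2030.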

So, while you should be investing in AI, take your time and don’t hit the panic button as if the world is going to rapidly become very different. As Bill Gates famously wrote: “We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten.”

Policy: this is going to be a bit of a mess

AI regulation is building quickly. The EU will pass its landmark AI Act this year. The US is pushing its Blueprint for an AI Bill of Rights. Every major country has some kind of AI strategy.

Most regulatory interventions are currently aimed at transparency. The challenges ChatGPT raises, however, have less to do with AI regulation itself and more to do with how AI-based products will intersect with existing areas of regulation. Transparency only lets regulators begin to research and understand these questions. But the risks themselves raise the question of how problems could force regulators to slow the pace of development and deployment. A few examples, and unanswered questions:

Copyright: While ChatGPT says it won’t claim copyright of the outputs it generates, does ChatGPT have the right to access and use the underlying information its model is based upon (i.e., basically everything on the internet!)?

Privacy: ChatGPT collects and processes all sorts of data and information. I tested out asking for someone’s address on ChatGPT, and it turns out that it’s got some privacy built in! That said, there will inevitably be personal information or other data gathered from breaches, or data that becomes sensitive when processed. What entirely new privacy risks are possible that we’re not even thinking about?

Security: ChatGPT clearly presents a huge risk for drafting and sending phishing and spam emails, and AI as a whole has significant hacking potential. How will people and their tools (like email clients) adapt to this risk? Will new regulations be needed given the massive possibility for harm?

Misinformation: ChatGPT provides some highly suspect answers to certain questions, and currently it is impossible to trace where they come from. It is also adept at making deceptive content seem believable. Combined with discussions of platform liability and content moderation, how will generative AI services that provide information directly to users (e.g. ChatGPT on Bing) conform to regulations?

Radicalization: ChatGPT can mimic content that drives radicalization. Alongside the years of policy work done on addressing online radicalization risk, how does ChatGPT change the game once again? There’s a good paper on this here.

Prognostication: the AI future looks concerning

I am a techno-optimist, so I don’t want to doom-monger, and in general I think the AI future will be better than the non-AI past. But there are real concerns. The longer term is always a guessing game this early on, but in the spirit of the old tech world, here are some if/thens that we should consider:

Information: If generative AI is just going to harvest all the information online to serve up immediate answers to people, then why would people put their information online for free anymore? One of the main use cases people have told me for ChatGPT is writing basic legal contracts. The business model of law firms putting these online for free is to get customers. They won’t do that anymore if ChatGPT takes the contracts and the customers! If this is the future, it could spell the end of wide, free access to information online.

Trust: If generative AI brings the cost of basic information outputs down to zero, then trusted and quality information outputs will become ever more valuable. This will put a huge premium on brand, reputation, and relationships to allow users to distinguish what is reliable vs an AI spewing various formulations of random content.

Economics: If generative AI brings the cost of basic research and certain basic skills to zero, then it will make many companies change how they employ and invest in people. This could create a significant long-term deflationary shock to the global economy akin to China’s accession to the WTO. In a narrower sense, AI could well put the onus back toward physical skills. In other words, you may want to teach your kids plumbing rather than programming.

HMM, INTERESTING

Top 5 - The eye-catching reads

Starting a social network is hard: FT Alphaville tries to start a Mastodon server and finds out all the reasons why it's a nightmare to run a social media network.

The Europeans have digital rights! Three interesting quotes:

1) “What is illegal offline, is illegal online”

2) “Artificial intelligence should serve as a tool for people, with the ultimate aim of increasing human well-being”

3) “Member States have called for a model of digital transformation that ensures that technology assists in addressing the need to take climate action and protect the environment.”

January 6th Committee social media report goes after the attention economy: “Regardless of their legal liability, they have an ethical obligation to prevent those services from being used to commit crimes, orchestrate violence, or otherwise contribute to offline harm. This is true whether or not the attention-seeking, algorithmically-driven business model at the core of the social media industry is driving polarization and radicalization.”

Indian government releases BharOS: an “indigenous operating system” aimed at reducing dependence on foreign technology. Add to this telecoms firms in India (and the EU) increasingly trying to take on the US tech giants, plus growing nationalist sentiment, and it paints a worrying picture for a country that may soon play the growth role in the global economy that China has played in recent decades.

China drafts competition rule updates: the draft includes rules on dark patterns, but the most eye-catching part was banning big-data driven price discrimination - “The Draft AUCL Amendments add a provision prohibiting a business operator from using algorithms to analyze user preferences, trading habits, and other characteristics to implement unreasonable differential treatment or other unreasonable trading conditions. This proposed amendment is in line with the Personal Information Protection Law of the People's Republic of China, which requires that automated decision-making should not result in the unreasonable differential treatment of individuals.”

AND, FINALLY

My top charts of the week

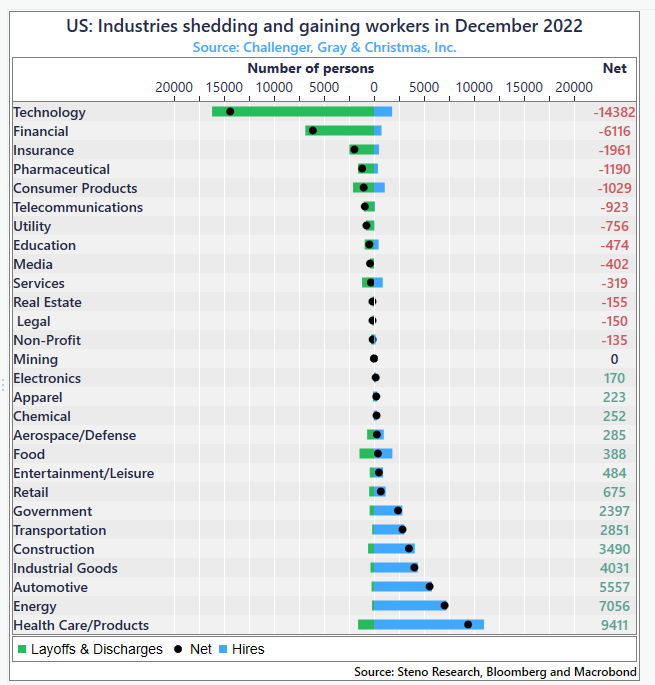

Where to look for jobs right now

Tech layoffs have been massive, but quite localized to the tech sector for now. If you want to look for a booming jobs market, look at healthcare and energy.

GSI trumps BRI

China’s Belt and Road Initiative has driven a lot of analysis about Chinese global influence. But it's on the wane, especially compared to newer Chinese security initiatives.

Longer history of tech and labor

AI disruptions sit on a decades-long history of labor-saving technology investments. How much further can this fall?